Intro – feedback is key

Although speech recognition in Mixed Reality apps is very good, even the best recognition sometimes fails, or you slightly mispronounce something. Or the command you just said is recognized, but not applicable in the current state. Silence, nothing happens, and you wonder: did the app just not understand me, is the response slow, or what? The result is always undesirable – users wait for something to happen and nothing does. They start to repeat the command and halfway through the app executes the first command after all, or even worse – they start shouting, which makes for quite an embarrassing situation (both for the user and bystanders). Believe me, I’ve been there. So it’s super important to inform your Mixed Reality app’s user right away that a voice command has been understood and is being processed. And if you can’t process it, inform the user of that as well.

What kind of feedback?

Well, that’s basically up to you. I usually choose a simple audio feedback sound – if you have been following my blog or downloading my apps you are by now very familiar with the ‘pringggg’ sound I use in every app, be it an app in the Windows Store or one of my many sample apps on GitHub. If someone uses a voice command that’s not appropriate in the current context or state of the app, I tend to give some spoken feedback, telling the user that although the app has understood the command, it can’t be executed now and, if possible, for what reason. Or I prompt for some additional action. For both kinds of feedback I use a centralized mechanism built on my Messenger behaviour, which has already played a role in multiple samples.

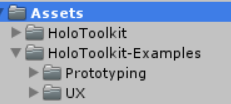

Project setup overview

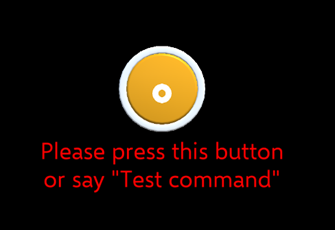

The hierarchy of the project is as displayed below, and all it does is show the user interface on the right:

If you say “Test command”, you will hear the “pringggg” sound I already described, and if you push the button you will hear the spoken feedback “Thank you for pressing this button”. Now this is rather trivial, but it only serves to show the principle. Notice, by the way, that the button comes from the Mixed Reality Toolkit examples – I described before how to extract those samples and use them in your app.

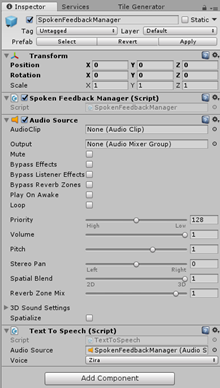

The Audio Feedback Manager and Spoken Feedback Manager look like this:

The Audio Feedback Manager contains an Audio Source that holds just the confirmation sound, and a little script “Confirm Sound Ringer” by yours truly, which will be explained below. This sound is intentionally not spatialized, as it’s a global confirmation sound. If it were spatialized, it would also be localized, and the user would be able to walk away from confirmation sounds or spoken feedback, which is not what we want.

The Spoken Feedback Manager contains an empty Audio Source (also not spatialized), a Text To Speech script from the Mixed Reality Toolkit, and the “Spoken Feedback Manager” script, also by me.

ConfirmSoundRinger

    using HoloToolkitExtensions.Messaging;
    using UnityEngine;

    namespace HoloToolkitExtensions.Audio
    {
        public class ConfirmSoundRinger : MonoBehaviour
        {
            private AudioSource _audioSource;

            void Start()
            {
                // Play the confirmation sound whenever a ConfirmSoundMessage is broadcast.
                Messenger.Instance.AddListener<ConfirmSoundMessage>(ProcessMessage);
            }

            private void ProcessMessage(ConfirmSoundMessage arg1)
            {
                PlayConfirmationSound();
            }

            private void PlayConfirmationSound()
            {
                // Look up the Audio Source lazily, the first time a sound is requested.
                if (_audioSource == null)
                {
                    _audioSource = GetComponent<AudioSource>();
                }

                if (_audioSource != null)
                {
                    _audioSource.Play();
                }
            }
        }
    }

Not quite rocket science. If a message of type ConfirmSoundMessage arrives, try to find an Audio Source. If found, play the sound. ConfirmSoundMessage is just an empty class with no properties or methods whatsoever – it’s a bare signal class.
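The message classes themselves are not listed in this post. Judging purely from how they are used in the scripts, they could look roughly like the sketch below. The namespace and the exact shape of the `Message` property are assumptions; the real classes in the sample project may differ.

```csharp
namespace HoloToolkitExtensions.Audio
{
    // Bare signal class: it carries no data, its type alone is the message.
    public class ConfirmSoundMessage
    {
    }

    // Carries the text that the Spoken Feedback Manager should speak.
    // The property name follows the usage "msg.Message" in the scripts;
    // everything else here is an assumption.
    public class SpokenFeedbackMessage
    {
        public string Message { get; set; }
    }
}
```

Keeping the signal class empty means a sender never has to think about parameters: broadcasting an instance is the whole request.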

SpokenFeedbackManager

Marginally more complex, but not a lot:

    using HoloToolkit.Unity;
    using HoloToolkitExtensions.Messaging;
    using System.Collections.Generic;
    using UnityEngine;

    namespace HoloToolkitExtensions.Audio
    {
        public class SpokenFeedbackManager : MonoBehaviour
        {
            // Texts waiting to be spoken, in the order they arrived.
            private Queue<string> _messages = new Queue<string>();

            private TextToSpeech _ttsManager;

            private void Start()
            {
                Messenger.Instance.AddListener<SpokenFeedbackMessage>(AddTextToQueue);
                _ttsManager = GetComponent<TextToSpeech>();
            }

            private void AddTextToQueue(SpokenFeedbackMessage msg)
            {
                _messages.Enqueue(msg.Message);
            }

            private void Update()
            {
                SpeakText();
            }

            private void SpeakText()
            {
                if (_ttsManager != null && _messages.Count > 0)
                {
                    // Only start the next message when nothing is being spoken or about to be.
                    if (!(_ttsManager.SpeechTextInQueue() || _ttsManager.IsSpeaking()))
                    {
                        _ttsManager.StartSpeaking(_messages.Dequeue());
                    }
                }
            }
        }
    }

If a SpokenFeedbackMessage comes in, its text is added to the queue. In the Update method, SpeakText is called, which first checks that a TextToSpeech component is present and that there is at least one message to process, then checks that the TextToSpeech is not already speaking or about to speak – and if so, it takes the next message off the queue and actually speaks it. The queue has two functions. First, the message may come from a background thread, and by having SpeakText called from Update, speaking it is automatically transferred to the main loop. (Note that Queue<string> itself is not synchronized, so if messages really do arrive from another thread, access to the queue should be guarded with a lock.) Second, it prevents messages being ‘overwritten’ before they are even spoken.

The trade-off of course is that you might stack up messages if the user quickly repeats an action, resulting in the user getting a lot of talk while the action is already over.
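One possible way to soften that trade-off is to cap the number of pending messages and to skip an immediate repeat that has not been spoken yet. The sketch below is not part of the sample project: `BoundedMessageQueue`, `TryEnqueue`, `TryDequeue` and the `maxPending` parameter are all hypothetical names. It also takes a lock around every queue access, on the assumption that messages may arrive from a background thread.

```csharp
using System.Collections.Generic;

// Hypothetical helper, not part of the sample project or the Mixed Reality Toolkit.
public class BoundedMessageQueue
{
    private readonly Queue<string> _messages = new Queue<string>();
    private readonly object _lock = new object();
    private readonly int _maxPending;
    private string _lastEnqueued;

    public BoundedMessageQueue(int maxPending)
    {
        _maxPending = maxPending;
    }

    // Returns false when the message was dropped instead of queued.
    public bool TryEnqueue(string message)
    {
        lock (_lock)
        {
            // While the queue is non-empty, the last enqueued text is still pending,
            // so an identical message would only repeat what is already waiting.
            bool isPendingRepeat = _messages.Count > 0 && message == _lastEnqueued;

            if (_messages.Count >= _maxPending || isPendingRepeat)
            {
                return false;
            }

            _messages.Enqueue(message);
            _lastEnqueued = message;
            return true;
        }
    }

    // Returns false (and a null message) when nothing is waiting.
    public bool TryDequeue(out string message)
    {
        lock (_lock)
        {
            if (_messages.Count > 0)
            {
                message = _messages.Dequeue();
                return true;
            }

            message = null;
            return false;
        }
    }
}
```

In SpokenFeedbackManager, AddTextToQueue would then call `TryEnqueue` and SpeakText would call `TryDequeue`. Whether silently dropping feedback is acceptable depends on the app, so treat the cap as a design choice rather than a default.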

On the Count > 0 instead of Any() – apparently you are to refrain from using LINQ extensively in Unity apps, as this is deemed inefficient. It hurts my eyes to see it used this way, but when in Rome…

Wiring it up

There is a script SpeechCommandExecuter sitting in Managers, next to a Speech Input Source and a Speech Input Handler, that is being called by the Speech Input Handler when you say “Test Command”. This is not quite rocket science, to put it mildly:

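    using HoloToolkitExtensions.Audio;
    using HoloToolkitExtensions.Messaging;
    using UnityEngine;

    public class SpeechCommandExecuter : MonoBehaviour
    {
        // Called by the Speech Input Handler when "Test Command" is recognized.
        public void ExecuteTestCommand()
        {
            Messenger.Instance.Broadcast(new ConfirmSoundMessage());
        }
    }

As is the ButtonClick script, which is attached to the ButtonPush: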

    using HoloToolkit.Unity.InputModule;
    using HoloToolkitExtensions.Audio;
    using HoloToolkitExtensions.Messaging;
    using UnityEngine;

    public class ButtonClick : MonoBehaviour, IInputClickHandler
    {
        public void OnInputClicked(InputClickedEventData eventData)
        {
            Messenger.Instance.Broadcast(
                new SpokenFeedbackMessage { Message = "Thank you for pressing this button" });
        }
    }

The point of doing it like this

Anywhere you have to give confirmation or feedback, you now just need to send a message – and you don’t have to worry about setting up an Audio Source and a Text To Speech and wiring them up correctly. Two reusable components take care of that. Typically, you would not send the confirmation directly from the pushed button or the speech command – you would first validate whether the command can be processed in the component that holds the logic, and then give confirmation or feedback from there.
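To make that last point concrete, here is a minimal sketch of a logic component that validates a command first and only then decides which kind of feedback to send. So that it runs outside Unity, it uses a small stand-in message bus; `SimpleMessenger`, `MoveCommandHandler`, `HasSelection` and the spoken text are all hypothetical and not taken from the sample project. The real Messenger behaviour is only assumed to offer a similar AddListener/Broadcast pair.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the Messenger behaviour (hypothetical, simplified).
public class SimpleMessenger
{
    private readonly Dictionary<Type, List<Delegate>> _listeners =
        new Dictionary<Type, List<Delegate>>();

    public void AddListener<T>(Action<T> listener)
    {
        List<Delegate> list;
        if (!_listeners.TryGetValue(typeof(T), out list))
        {
            list = new List<Delegate>();
            _listeners[typeof(T)] = list;
        }
        list.Add(listener);
    }

    public void Broadcast<T>(T message)
    {
        List<Delegate> list;
        if (_listeners.TryGetValue(typeof(T), out list))
        {
            foreach (var listener in list)
            {
                ((Action<T>)listener)(message);
            }
        }
    }
}

// Message classes as they are used in this post (shape assumed).
public class ConfirmSoundMessage { }
public class SpokenFeedbackMessage { public string Message { get; set; } }

// Hypothetical component holding the logic for a "move" voice command.
public class MoveCommandHandler
{
    private readonly SimpleMessenger _messenger;

    public bool HasSelection { get; set; }
    public bool IsMoving { get; private set; }

    public MoveCommandHandler(SimpleMessenger messenger)
    {
        _messenger = messenger;
    }

    public void ExecuteMoveCommand()
    {
        if (!HasSelection)
        {
            // Understood, but not applicable in the current state: say why.
            _messenger.Broadcast(new SpokenFeedbackMessage
            {
                Message = "Please select an object before moving it"
            });
            return;
        }

        // Command accepted: confirm right away, then do the work.
        _messenger.Broadcast(new ConfirmSoundMessage());
        IsMoving = true;
    }
}
```

The handler never touches an Audio Source or Text To Speech component. It only knows the two message types, which is what keeps the feedback components reusable.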

Conclusion

I hope to have convinced you of the importance of feedback, and to have shown you a simple and reusable way of implementing it. You can find the sample code, as always, on GitHub.