Intro

Both of my HoloLens apps in the store ask the user to identify some kind of horizontal space to put holograms on: in one case Schiphol Airport, in the other a map of some part of the world. You can of course use Spatial Understanding, which is awesome and offers very advanced capabilities, but it requires some initial activity from the user. Sometimes you just want to identify the floor or some other horizontal surface, if only to find out how tall the user is. This is the actual code I wrote for Walk the World, extracted for you. In the demo project, it displays a white plane at floor level.

Setting the stage.

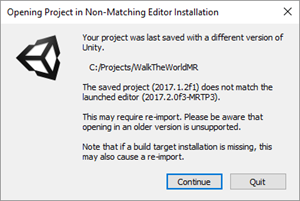

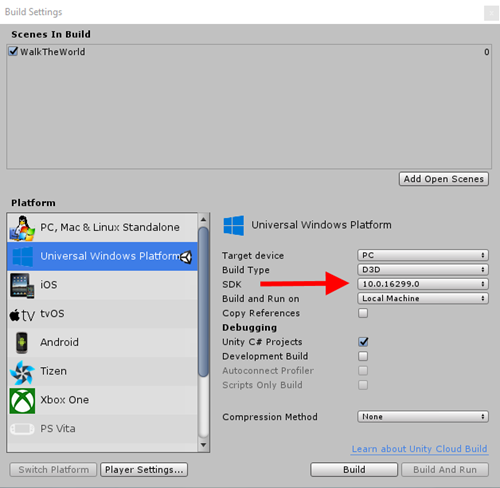

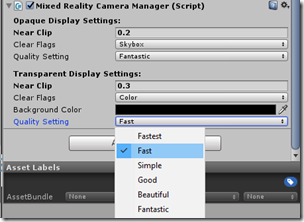

We start by creating an empty project. Then we import the newest Mixed Reality Toolkit. Once you have done that, you will notice the extra menu option "HoloToolkit" no longer appears; it now says "Mixed Reality Toolkit". It still has three settings, but one of them has profoundly changed: the setting "Apply Mixed Reality Scene Settings" basically adds everything you need to get started:

- A "MixedRealityCameraParent": the replacement for the HoloLensCamera, encapsulating a camera that works on both HoloLens and immersive headsets.

- A default cursor

- An input manager that processes input from gestures, controllers or other devices depending on whether your app runs on a HoloLens or an immersive headset

Now I tend to organize stuff a little differently, so after setting up the scene I rearrange it as follows.

I make an empty game object "HologramCollection" that will hold my holograms, and I chuck all the standard or non-graphic objects into a game object "Managers". Notice I also added SpatialMapping. After you click the other two options in the Mixed Reality Toolkit/Configure menu, our basic app setup is ready to go.

Some external stuff to get

Then we import LeanTween and Mobile Power Ups Vol Free 1 from the Unity Asset Store. Both are free. Note: the latter is deprecated, but the one arrow asset we need from it is still usable. If you can't get it from the store anymore, just nick it from my code ;)

Recurring guest appearances

We need some more stuff I wrote about before:

- The Messenger, although its internals have changed a little since my original article

- The KeepInViewController class to keep an object in view: an improved version of the MoveByGaze class from my post about a floating info screen

- The LookAtCamera class to keep an object oriented to the camera

Setting up the initial game objects

The end result should be this:

HologramCollection has three objects in it:

- A 3DTextPrefab "LookAtFloorText"

- An empty game object "ArrowHolder"

- A simple plane. This is the object we are going to project on the floor.

Inside the ArrowHolder we place two more objects:

- A 3DTextPrefab "ConfirmText"

- The "Arrows Green" prefab from Mobile Power Ups Vol Free 1, which initially looks like the image on the right

So let's start at the top setting up our objects:

LookAtFloorText

- Z position to 1, so it spawns 1 meter in front of you.

- Character size to 0.1

- Anchor to middle center

- Font size to 480

- Color to #00FF41FF (or any other color you like)

After that, we drag two components onto it and set their properties:

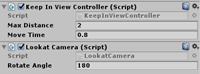

- KeepInViewController: Max Distance 2.0, Move Time 0.8

- LookAtCamera: Rotate Angle 180

This keeps the text at a maximum distance of 2 meters, or closer if you are looking at an object that is nearer. It moves to a new position in 0.8 seconds, and with an angle of 180 degrees it will always be readable to you.
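KeepInViewController's source isn't included in this article, so here is only a hypothetical, Unity-free sketch of the rule just described: place the text along the gaze at Max Distance, or at the hit distance if something nearer is in the way, and ease towards that spot over Move Time. System.Numerics.Vector3 stands in for Unity's Vector3, and the names KeepInViewMath, TargetPosition and MoveFraction are made up for illustration.

```csharp
using System;
using System.Numerics;

// Hypothetical sketch of the "at most Max Distance, or closer if something is nearer"
// rule; this is NOT the actual KeepInViewController code.
public static class KeepInViewMath
{
    // headPosition:  where the user's head is.
    // gazeDirection: where the user looks (must be non-zero, need not be normalized).
    // maxDistance:   the Max Distance setting (2.0 in this article).
    // hitDistance:   distance to whatever the gaze ray hits, or null if it hits nothing.
    public static Vector3 TargetPosition(Vector3 headPosition, Vector3 gazeDirection,
                                         float maxDistance, float? hitDistance)
    {
        var direction = Vector3.Normalize(gazeDirection);
        // A nearer object pulls the text in; otherwise it floats at maxDistance.
        var distance = hitDistance.HasValue
            ? Math.Min(maxDistance, hitDistance.Value)
            : maxDistance;
        return headPosition + direction * distance;
    }

    // Fraction of the way to the target after 'elapsed' seconds of a move that
    // should take 'moveTime' seconds (0.8 in this article), clamped to [0, 1].
    public static float MoveFraction(float elapsed, float moveTime)
    {
        if (moveTime <= 0f) return 1f;
        return Math.Max(0f, Math.Min(1f, elapsed / moveTime));
    }
}
```

In a Unity version you would feed TargetPosition with the camera position, its forward vector and the distance reported by a gaze raycast, then interpolate towards the result using MoveFraction.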

ConfirmText

This is hardly worth its own subparagraph, but for the sake of completeness I show its configuration.

Notice:

- Y = 0.27 and Z = 0 here.

- This has only the LookAtCamera script attached to it, with the same settings. There is no KeepInViewController here.

Arrows Green

This is the object nicked from Mobile Power Ups Vol Free 1.

- Y = 0.1: I moved it up a little so it always appears just above the floor

- I rotated it over X so it will point to the floor.

- I added a HorizontalAnimator to it with a spin time of 2.5 seconds over an absolute (vertical) axis so it will slowly spin around.
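HorizontalAnimator isn't shown in this article either. As a hypothetical illustration of what "a spin time of 2.5 seconds" means: one full turn every 2.5 seconds is 360 / 2.5 = 144 degrees per second. The plain C# sketch below (names made up, not the actual HorizontalAnimator) computes the angle from elapsed time, and the per-frame increment you would apply in an Update loop.

```csharp
using System;

// Hypothetical sketch of a constant-speed spin around a vertical axis;
// NOT the actual HorizontalAnimator code.
public static class SpinMath
{
    // Angle in degrees, wrapped to one full turn, after 'elapsedSeconds' when one
    // turn takes 'spinTime' seconds (2.5 in this article).
    public static float AngleAt(float elapsedSeconds, float spinTime)
    {
        if (spinTime <= 0f) throw new ArgumentOutOfRangeException(nameof(spinTime));
        var turns = elapsedSeconds / spinTime;
        // Keep only the fractional part of the number of turns
        return (turns - (float)Math.Floor(turns)) * 360f;
    }

    // Degrees to add in one frame lasting 'deltaTime' seconds.
    public static float DeltaAngle(float deltaTime, float spinTime)
        => 360f / spinTime * deltaTime;
}
```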

Plane

The actual object we are going to show on the floor. At its default size of 10x10 meters it's a bit big, so we scale it down a little.

And now for some code.

The general idea

Basically, two classes do the work; the rest is only 'support crew':

- FloorFinder actually looks for the floor, and controls a prompt object (LookAtFloorText) that prompts you - well, to look at the floor

- FloorConfirmer displays an object that shows you where the floor was found, and then waits for a method to be called that either accepts or rejects the floor. In the sample app, this is done with speech commands.

- They both communicate via a simple messaging system
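The Messenger's internals aren't shown here, so the following is a hypothetical, minimal type-keyed publish/subscribe class that mimics only the AddListener&lt;T&gt;/Broadcast&lt;T&gt; calls used in this article. It is deliberately named SimpleMessenger to make clear it is not the author's HoloToolkitExtensions.Messaging.Messenger, and it is a plain class rather than a Unity singleton.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical minimal messenger: listeners are keyed on the message type.
// NOT the author's HoloToolkitExtensions.Messaging.Messenger.
public sealed class SimpleMessenger
{
    private readonly Dictionary<Type, List<Delegate>> _listeners =
        new Dictionary<Type, List<Delegate>>();

    public void AddListener<T>(Action<T> callback)
    {
        if (!_listeners.TryGetValue(typeof(T), out var list))
        {
            list = new List<Delegate>();
            _listeners[typeof(T)] = list;
        }
        list.Add(callback);
    }

    public void RemoveListener<T>(Action<T> callback)
    {
        if (_listeners.TryGetValue(typeof(T), out var list)) list.Remove(callback);
    }

    public void Broadcast<T>(T message)
    {
        if (!_listeners.TryGetValue(typeof(T), out var list)) return;
        // Iterate over a copy so listeners may add or remove listeners while being notified
        foreach (var listener in list.ToArray())
        {
            ((Action<T>)listener)(message);
        }
    }
}
```

Because listeners are keyed on the message type, sender and receiver only need to agree on the message class (here, PositionFoundMessage), not on each other.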

The message class is of course very simple:

```csharp
using UnityEngine;

public class PositionFoundMessage
{
    public Vector3 Location { get; }

    public PositionFoundMessage(Vector3 location)
    {
        Location = location;
    }

    // Defaults to Unprocessed, the first value of the enum
    public PositionFoundStatus Status { get; set; }
}
```

With an enum to go with it indicating where in the process we are:

```csharp
public enum PositionFoundStatus
{
    Unprocessed,
    Accepted,
    Rejected
}
```

On startup, the FloorConfirmer hides its confirmation object (the arrow with text), and the FloorFinder shows its prompt object. When FloorFinder detects a floor, it sends the position in a PositionFoundMessage with status "Unprocessed". It also listens to PositionFoundMessages itself: if it receives one with status "Unprocessed" (which, in this sample, only it sends), it disables itself and hides the prompt object (the text saying "Please look at the floor").

If the FloorConfirmer receives a PositionFoundMessage with status "Unprocessed", it shows its confirmation object at the location where the floor was detected. Then, as I wrote, it waits for its Accept or Reject method to be called. If Accept is called, it resends the PositionFoundMessage with status "Accepted" to anyone who might be interested; in this app, that's a simple class "ObjectDisplayer" that shows the game object it is attached to at the correct height below the user's head. If the Reject method is called, FloorConfirmer resends the message as well, but with status "Rejected", which wakes up the FloorFinder again.
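To make the hand-off concrete, here is a hypothetical, Unity-free model that only tracks which parts of the demo are visible or searching after each status. It is a simplification for reasoning about the flow, not code from the demo project; the enum mirrors the article's PositionFoundStatus so the sketch compiles on its own, and FloorFlowModel is a made-up name.

```csharp
using System;

// Mirrors the article's enum so this sketch is self-contained.
public enum PositionFoundStatus { Unprocessed, Accepted, Rejected }

// Hypothetical model of the FloorFinder / FloorConfirmer / ObjectDisplayer hand-off.
public sealed class FloorFlowModel
{
    public bool FinderSearching { get; private set; } = true; // FloorFinder.Update is looking
    public bool PromptVisible { get; private set; } = true;   // "Please look at the floor"
    public bool ConfirmVisible { get; private set; }          // arrow with confirm text
    public bool ObjectVisible { get; private set; }           // ObjectDisplayer's object

    public void Handle(PositionFoundStatus status)
    {
        switch (status)
        {
            case PositionFoundStatus.Unprocessed:
                // Candidate found: the finder stops and hides its prompt, the confirmer shows the arrow
                FinderSearching = false;
                PromptVisible = false;
                ConfirmVisible = true;
                break;
            case PositionFoundStatus.Accepted:
                // The confirmer hides the arrow; ObjectDisplayer shows its object
                ConfirmVisible = false;
                ObjectVisible = true;
                break;
            case PositionFoundStatus.Rejected:
                // The confirmer hides the arrow; the finder resets and looks again
                ConfirmVisible = false;
                FinderSearching = true;
                PromptVisible = true;
                break;
            default:
                throw new ArgumentOutOfRangeException(nameof(status));
        }
    }
}
```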

Finding the actual floor

```csharp
using HoloToolkit.Unity.InputModule;
using HoloToolkitExtensions.Messaging;
using HoloToolkitExtensions.Utilities;
using UnityEngine;

public class FloorFinder : MonoBehaviour
{
    // Maximum gaze distance (meters) at which we look for the floor
    public float MaxDistance = 3.0f;

    // A hit must be more than this (meters) below the user's head to count as floor
    public float MinHeight = 1.0f;

    private Vector3? _foundPosition = null;

    // The prompt ("Please look at the floor")
    public GameObject LabelText;

    // Searching only starts after this moment (2 seconds after start or reset)
    private float _delayMoment;

    void Start()
    {
        _delayMoment = Time.time + 2;
        Messenger.Instance.AddListener<PositionFoundMessage>(ProcessMessage);
#if !UNITY_EDITOR
        Reset();
#else
        LabelText.SetActive(false);
#endif
    }

    void Update()
    {
        if (_foundPosition == null && Time.time > _delayMoment)
        {
            _foundPosition = LookingDirectionHelpers.GetPositionOnSpatialMap(MaxDistance,
                GazeManager.Instance.Stabilizer);
            if (_foundPosition != null)
            {
                // Only accept hits that are sufficiently far below the user's head
                if (GazeManager.Instance.Stabilizer.StablePosition.y -
                    _foundPosition.Value.y > MinHeight)
                {
                    Messenger.Instance.Broadcast(
                        new PositionFoundMessage(_foundPosition.Value));
                    PlayConfirmationSound();
                }
                else
                {
                    // Not far enough below the head: keep looking
                    _foundPosition = null;
                }
            }
        }
    }

    public void Reset()
    {
        _delayMoment = Time.time + 2;
        _foundPosition = null;
        if (LabelText != null) LabelText.SetActive(true);
    }

    private void ProcessMessage(PositionFoundMessage message)
    {
        if (message.Status == PositionFoundStatus.Rejected)
        {
            // The user rejected the position: start looking again
            Reset();
        }
        else
        {
            LabelText.SetActive(false);
        }
    }

    private void PlayConfirmationSound()
    {
        Messenger.Instance.Broadcast(new ConfirmSoundMessage());
    }
}
```

The Update method does all the work: if a position on the spatial map is found that's more than MinHeight below the user's head, then we might have found the floor, and we send out a message (with the default status Unprocessed). The method below Update, ProcessMessage, receives that message too and hides the prompt text.

The helper method "GetPositionOnSpatialMap" in LookingDirectionHelpers casts a ray along the user's viewing direction and returns the point where it hits the spatial map, provided that point lies within maxDistance. It's like drawing a line projecting from the user's head ;)
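Both checks FloorFinder makes can be tried outside Unity. The following hypothetical sketch uses System.Numerics and replaces the spatial map with a flat horizontal plane at height floorY (an assumption: the real helper lets Physics.Raycast hit the spatial mapping mesh, which is neither flat nor necessarily horizontal). RaycastHorizontalPlane mimics the maxDistance cut-off, and IsFloorCandidate is FloorFinder's MinHeight rule; FloorProbe and its methods are made-up names.

```csharp
using System;
using System.Numerics;

// Hypothetical, Unity-free sketch; a flat plane stands in for the spatial map.
public static class FloorProbe
{
    // Casts a ray from 'origin' along 'direction' and returns where it hits the
    // plane y = floorY, or null if it misses or the hit is farther than maxDistance.
    public static Vector3? RaycastHorizontalPlane(Vector3 origin, Vector3 direction,
                                                  float floorY, float maxDistance)
    {
        var dir = Vector3.Normalize(direction);
        if (Math.Abs(dir.Y) < 1e-6f) return null;          // looking parallel to the plane
        var distance = (floorY - origin.Y) / dir.Y;         // distance along the ray
        if (distance < 0f || distance > maxDistance) return null; // behind us, or too far
        return origin + dir * distance;
    }

    // FloorFinder's acceptance rule: the hit must lie more than minHeight below the head.
    public static bool IsFloorCandidate(float headY, float hitY, float minHeight)
        => headY - hitY > minHeight;
}
```

For example, with the head at 1.6 m and MinHeight at 1.0 m, a hit on a 0.8 m high table is rejected (1.6 - 0.8 = 0.8, which is not more than 1.0), while a hit on the floor at 0 m is accepted.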

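```csharp
using HoloToolkit.Unity.InputModule;     // BaseRayStabilizer
using HoloToolkit.Unity.SpatialMapping;  // SpatialMappingManager
using UnityEngine;

namespace HoloToolkitExtensions.Utilities
{
    public static class LookingDirectionHelpers
    {
        // Casts a ray along the (stabilized) gaze and returns the first point where it
        // hits the spatial map within maxDistance, or null if nothing is hit.
        public static Vector3? GetPositionOnSpatialMap(float maxDistance = 2,
            BaseRayStabilizer stabilizer = null)
        {
            RaycastHit hitInfo;
            // Prefer the stabilized gaze ray; fall back to the raw camera ray
            var headRay = stabilizer != null
                ? stabilizer.StableRay
                : new Ray(Camera.main.transform.position, Camera.main.transform.forward);
            // Only test against the spatial mapping layer(s)
            if (SpatialMappingManager.Instance != null &&
                Physics.Raycast(headRay, out hitInfo, maxDistance,
                    SpatialMappingManager.Instance.LayerMask))
            {
                return hitInfo.point;
            }
            return null;
        }
    }
}
```

Is this the floor we want?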

```csharp
using HoloToolkitExtensions.Messaging;
using UnityEngine;

public class FloorConfirmer : MonoBehaviour
{
    private PositionFoundMessage _lastReceivedMessage;

    // The arrow with the confirmation text
    public GameObject ConfirmObject;

    void Start()
    {
        Messenger.Instance.AddListener<PositionFoundMessage>(ProcessMessage);
        Reset();
#if UNITY_EDITOR
        // No spatial map in the editor: fake a floor at y = -1.6 and accept it right away
        _lastReceivedMessage = new PositionFoundMessage(new Vector3(0, -1.6f, 0));
        ResendMessage(true);
#endif
    }

    public void Reset()
    {
        if (ConfirmObject != null) ConfirmObject.SetActive(false);
        _lastReceivedMessage = null;
    }

    public void Accept()
    {
        ResendMessage(true);
    }

    public void Reject()
    {
        ResendMessage(false);
    }

    private void ResendMessage(bool accepted)
    {
        // Nothing to accept or reject if no floor position has been received
        if (_lastReceivedMessage != null)
        {
            _lastReceivedMessage.Status = accepted ?
                PositionFoundStatus.Accepted : PositionFoundStatus.Rejected;
            Messenger.Instance.Broadcast(_lastReceivedMessage);
            Reset();
            if (!accepted) PlayConfirmationSound();
        }
    }

    private void ProcessMessage(PositionFoundMessage message)
    {
        _lastReceivedMessage = message;
        if (message.Status != PositionFoundStatus.Unprocessed)
        {
            Reset();
        }
        else
        {
            // Show the arrow 5 cm above the found position
            ConfirmObject.SetActive(true);
            ConfirmObject.transform.position =
                message.Location + Vector3.up * 0.05f;
        }
    }

    private void PlayConfirmationSound()
    {
        Messenger.Instance.Broadcast(new ConfirmSoundMessage());
    }
}
```

A rather simple class: it hides its confirm object at startup. If it gets a PositionFoundMessage, two things might happen:

- If it's an Unprocessed message, it activates its confirm object (the arrow) and places it at the location provided in the message (well, 5 cm above that).

- For any other PositionFoundMessage, it deactivates itself and hides the confirm object.

If the Accept method is called from outside, it resends the message with status Accepted for any interested listener and deactivates itself. If the Reject method is called, it sends the message with status Rejected, effectively deactivating itself too, but waking up the FloorFinder again.

And thus these two objects, the FloorFinder and the FloorConfirmer, can work seamlessly together while having no knowledge of each other whatsoever.

The final basket

For anything to happen after a PositionFoundMessage with status Accepted is sent, there also needs to be something that receives it and acts upon it. In this app that is ObjectDisplayer: it places the game object it's attached to at the same vertical position as the point it received, or rather 5 cm above it. It's not advisable to use the exact vertical floor position, as stuff might disappear under the floor; I have found horizontal planes are never smooth or, indeed, actually horizontal.

```csharp
using HoloToolkitExtensions.Messaging;
using UnityEngine;

public class ObjectDisplayer : MonoBehaviour
{
    void Start()
    {
        Messenger.Instance.AddListener<PositionFoundMessage>(ShowObject);
#if !UNITY_EDITOR
        // Stay hidden until a floor position has been accepted
        gameObject.SetActive(false);
#endif
    }

    private void ShowObject(PositionFoundMessage m)
    {
        if (m.Status == PositionFoundStatus.Accepted)
        {
            // Keep our own x and the parent's z; put the object 5 cm above the floor
            transform.position = new Vector3(transform.position.x, m.Location.y,
                transform.parent.position.z) + Vector3.up * 0.05f;
            if (!gameObject.activeSelf)
            {
                gameObject.SetActive(true);
            }
        }
    }
}
```
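The placement in ShowObject boils down to a bit of vector arithmetic. Here it is as a hypothetical, Unity-free helper (System.Numerics.Vector3 in place of Unity's Vector3; the name Placement.AboveFloor is made up), which may make the choice of coordinates easier to see: x comes from the object itself, z from its parent, and y from the accepted floor height plus the 5 cm offset.

```csharp
using System.Numerics;

// Hypothetical, Unity-free version of the position calculation in ObjectDisplayer.ShowObject.
public static class Placement
{
    // current: the object's current position (only its x is kept).
    // floorY:  the y of the accepted PositionFoundMessage location.
    // parentZ: the z of the object's parent.
    // offset:  lift above the floor in meters (5 cm, as in the article).
    public static Vector3 AboveFloor(Vector3 current, float floorY, float parentZ,
                                     float offset = 0.05f)
        => new Vector3(current.X, floorY + offset, parentZ);
}
```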

Wiring it all together

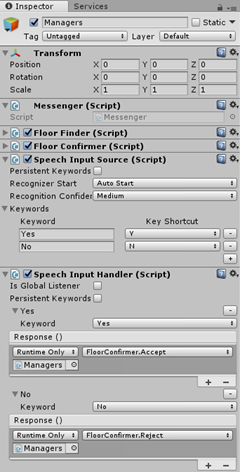

FloorFinder and FloorConfirmer sit together in the Managers object, but there's more stuff in there to tie all the knots:

- The Messenger, because if there are messages to be sent, there should also be something to send them around

- A Speech Input Source and a Speech Input Handler. Notice the latter calls FloorConfirmer's Accept method on "Yes" and its Reject method on "No".

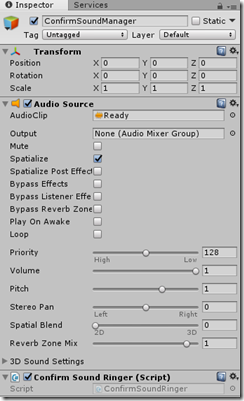
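The Speech Input Handler is configured in the Inspector rather than in code. Conceptually it maps recognized keywords to methods; the hypothetical sketch below (plain C#, not the Mixed Reality Toolkit API, with the made-up name KeywordRouter) shows that idea with a case-insensitive dictionary from keyword to action.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a keyword-to-method mapping; NOT the MRTK SpeechInputHandler.
public sealed class KeywordRouter
{
    private readonly Dictionary<string, Action> _responses =
        new Dictionary<string, Action>(StringComparer.OrdinalIgnoreCase);

    // Registers (or replaces) the action for a keyword.
    public void Register(string keyword, Action response) => _responses[keyword] = response;

    // Runs the action for a recognized keyword; returns false for unknown keywords.
    public bool OnKeywordRecognized(string keyword)
    {
        if (!_responses.TryGetValue(keyword, out var response)) return false;
        response();
        return true;
    }
}
```

In the demo, "Yes" corresponds to FloorConfirmer.Accept and "No" to FloorConfirmer.Reject.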

Adding some sound

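Both FloorFinder and FloorConfirmer broadcast a ConfirmSoundMessage when they want to give audible feedback. The ConfirmSoundRinger listens for that message and plays the AudioSource on its own game object, if there is one: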

```csharp
using HoloToolkitExtensions.Messaging;
using UnityEngine;

public class ConfirmSoundRinger : MonoBehaviour
{
    private AudioSource _audioSource;

    void Start()
    {
        Messenger.Instance.AddListener<ConfirmSoundMessage>(ProcessMessage);
    }

    private void ProcessMessage(ConfirmSoundMessage arg1)
    {
        PlayConfirmationSound();
    }

    private void PlayConfirmationSound()
    {
        // Look up the AudioSource on this game object once, then reuse it
        if (_audioSource == null)
        {
            _audioSource = GetComponent<AudioSource>();
        }
        if (_audioSource != null)
        {
            _audioSource.Play();
        }
    }
}
```

Conclusion

And that's it. Simply stare at a place below your head (by default at least 1 meter below it), say "Yes" and the white plane will appear exactly on the ground. Or, as I explained, a little above it. Replace the plane with your object of choice and you are good to go, without Spatial Understanding or other complex code.

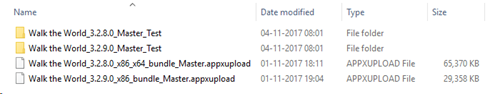

As usual, demo code can be found on GitHub.